Previously at Ingenuity Cleveland

Ingenuity: StarLords (Big Pixel Display) Part 1

"There's always time for another project", as the sign in the back of the makerspace says. It was July of 2024, and Ed Morra had an interesting one: A giant display, but with low resolution, with pixels (all 18x18 = 324 of them) made from used gallon milk jugs. The low resolution would be a feature, not a bug, as it would run a retro-inspired video game with blocky elements.

Initially, Ingenuity leadership was lukewarm on the concept, but Ed went the extra mile, creating a concept render of the entire project in Blender:

This had the effect of completely selling the artistic director on the concept, and preparations began in earnest.

False Starts

Besides the interesting aesthetic, milk jugs were selected because we thought they would be easy to procure, but fate had other plans. Ed put out a call for members to bring in used jugs, figuring that most brands and types would be suitable, but this turned out not to be the case: the mounting board design that we had in mind relied on a lip on the nozzle of the Distillata water jugs used as a reference, and that lip turned out to be absent on most other brands. A Plan B was devised in short order: a donation or sponsorship of unused jugs would be secured from Distillata themselves. Early overtures to this effect were also unsuccessful, but then a chance meeting with an executive [1] put us back on track. The new jugs were still of an incompatible screw-top design, though, so Ed and Sam designed an adapter, which we duly christened the Jug Nut:

The jugs would then be mounted nozzle-down in the frames, with an addressable LED placed in the nozzle of each jug and wired together as a string in a serpentine arrangement (traveling left across one row, then right across the next).
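A serpentine string means the rendering code has to translate grid coordinates into a position along the LED chain. Here is a minimal sketch of that mapping, not the project's actual code: the function name, the choice of which end the string enters at, and the row numbering are all assumptions made for illustration.

```python
WIDTH = 18   # pixels per row, from the 18x18 panel described above
HEIGHT = 18  # number of rows


def serpentine_index(x: int, y: int, width: int = WIDTH, height: int = HEIGHT) -> int:
    """Map grid coordinates (x, y) to a position along the LED string.

    Assumptions (hypothetical, for illustration): x counts from the left,
    y counts rows in wiring order, and the string enters row 0 at its
    right-hand end. Even rows therefore run right to left, and odd rows
    run left to right.
    """
    if not (0 <= x < width and 0 <= y < height):
        raise ValueError(f"({x}, {y}) is outside the {width}x{height} grid")
    if y % 2 == 0:
        # Even row: the first LED of the row sits at the right edge.
        return y * width + (width - 1 - x)
    # Odd row: the first LED of the row sits at the left edge.
    return y * width + x
```

If the real string starts at a different corner, only the parity test (or the mirrored x) changes; the rest of the renderer can keep thinking in plain (x, y) coordinates.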

Meanwhile, the software effort was also exploring different alternatives: We started with the idea of using an ESP32, specifically the DigQuad by QuinLED, which takes care of a lot of the electrical hassles that commonly plague NeoPixel projects. We decided to start development in MicroPython, as we liked the ease of development and portability (and I was eager to add another skill to my toolbelt). However, two different issues presented themselves at about the same time, which led to a platform change:

- The MicroPython code began to run into performance limitations. We figured this might happen, but we thought porting to C++ wouldn't be too much trouble if necessary.

- Processing and routing audio from an ESP32, while possible, would be a significant task on its own requiring custom circuitry and significant software support.

Moving to a Raspberry Pi 3B+ solved both of these problems. The existing code would see a significant performance boost thanks to the faster processor and running under CPython, and the audio could use a standard desktop audio stack and the built-in headphone jack. We were soon on our way toward a working product:

I give Ed (filming) an initial demonstration of the game

Software Approach

Going into the project, I didn't have much experience with game development, but I knew that separation of purpose was important for creating a codebase that wouldn't become a ball of spaghetti. I started out with separate update() and render() functions, with the former updating the game's "world state" (as stored in a GameState object) and the latter performing the display rendering and audio playback. This also made it fairly simple to implement sub-ticks (multiple physics/state updates per rendered frame), which was necessary to keep ball motion accurate.
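The update/render split with sub-ticks can be sketched roughly as follows. This is an illustration under assumptions, not the code from starlords.py: the fields on GameState, the constants, and the run_frame helper are hypothetical, and only the update(), render(), and GameState names come from the description above.

```python
from dataclasses import dataclass

SUB_TICKS_PER_FRAME = 4   # hypothetical value
FRAME_TIME = 1.0 / 30.0   # hypothetical frame period, in seconds


@dataclass
class GameState:
    """Hypothetical, stripped-down world state: one ball moving along x."""
    ball_x: float = 0.0
    ball_vx: float = 6.0  # pixels per second
    ticks: int = 0


def update(state: GameState, dt: float) -> None:
    """Advance the world state by dt seconds. No display or audio I/O here."""
    state.ball_x += state.ball_vx * dt
    state.ticks += 1


def render(state: GameState) -> str:
    """Produce output from the current state. No game logic here."""
    return f"ball at x={state.ball_x:.2f}"


def run_frame(state: GameState, frame_time: float = FRAME_TIME,
              sub_ticks: int = SUB_TICKS_PER_FRAME) -> str:
    """Run several small physics steps, then render exactly once."""
    dt = frame_time / sub_ticks
    for _ in range(sub_ticks):
        update(state, dt)
    return render(state)
```

Because each sub-tick moves the ball a fraction of the distance it would cover in a whole frame, a fast ball is less likely to skip past a thin collider between two rendered frames.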

I/O related functionality was implemented in the hardware subpackage, which used an abstract base class approach in many of the modules to allow different implementations to be seamlessly swapped out. This was mainly used to create simple simulated hardware for local testing and debugging (for instance, there is a PrintDisplay class which prints the display in a simple ASCII format to the console), but it's also useful for general extensibility of the design; for instance, we experimented with driving the display over the ArtNet protocol, so I created artnet_display.py, which implements the common Display class functionality and required no changes to the rendering logic in starlords.py.
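As a rough sketch of that abstract base class approach: the Display and PrintDisplay names come from the description above, but every method name and signature here (set_pixel, show, render_text, draw_paddle) is an assumption made for illustration and may not match the real hardware subpackage.

```python
from abc import ABC, abstractmethod
from typing import List, Tuple

Color = Tuple[int, int, int]


class Display(ABC):
    """Common interface that the rendering logic talks to (hypothetical API)."""

    def __init__(self, width: int, height: int):
        self.width = width
        self.height = height
        self.pixels: List[List[Color]] = [[(0, 0, 0)] * width for _ in range(height)]

    def set_pixel(self, x: int, y: int, color: Color) -> None:
        self.pixels[y][x] = color

    @abstractmethod
    def show(self) -> None:
        """Push the pixel buffer to whatever output this display drives."""


class PrintDisplay(Display):
    """Simulated display for local testing: lit pixels print as '#'."""

    def render_text(self) -> str:
        return "\n".join(
            "".join("#" if any(color) else "." for color in row)
            for row in self.pixels
        )

    def show(self) -> None:
        print(self.render_text())


def draw_paddle(display: Display, x: int, y: int, length: int, color: Color) -> None:
    """Rendering code depends only on the Display interface, not on a backend."""
    for offset in range(length):
        display.set_pixel(x + offset, y, color)
```

Swapping in a NeoPixel or ArtNet backend would then mean writing another subclass with its own show(), leaving functions like draw_paddle untouched.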

The main programming challenge was implementing the basic physics required to drive the game, especially since I wanted the ball to behave as a circular ball which would bounce at different angles from the corners of rectangular blocks. The required collision detection can be described as a special case of the Separating Axis Theorem (SAT), and happens in two phases. The first phase checks whether any corners of rectangular colliders have entered the circle, registering a collision with a normal vector pointing toward the center of the ball:

def _circle_rectangle_collision(circle_pos: v2, circle_radius: float, rectangle_pos: v2, rectangle_size: v2):
    # Find the distance to the closest of the four corners of the rectangle
    corners = [rectangle_pos, v2(rectangle_pos.x + rectangle_size.x, rectangle_pos.y),
               rectangle_pos + rectangle_size,
               v2(rectangle_pos.x, rectangle_pos.y + rectangle_size.y)]
    closest_corner, dist = min(((corner, (corner - circle_pos).length2())
                                for corner in corners), key=lambda x: x[1])
    dist = math.sqrt(dist)
    if dist <= circle_radius:
        # Find the two intersection points between the edges of the circle and rectangle.
        # We use the relation y = [plus or minus] sqrt(r^2 - x^2)
        abs_intersect_x = math.sqrt(
            circle_radius ** 2 - (closest_corner.y - circle_pos.y) ** 2)
        abs_intersect_y = math.sqrt(
            circle_radius ** 2 - (closest_corner.x - circle_pos.x) ** 2)
        # Convert the x and y intersections to points, resolving the [plus or minus] by checking which side
        # of the circle the intersection was.
        ix_point = v2(circle_pos.x + (
            -abs_intersect_x if closest_corner.x < circle_pos.x else abs_intersect_x), closest_corner.y)
        iy_point = v2(closest_corner.x, circle_pos.y + (
            -abs_intersect_y if closest_corner.y < circle_pos.y else abs_intersect_y))
        # Take the vector from the midpoint of the intersect points through the center of the circle, and
        # normalize it to a unit vector.
        normal_vector = circle_pos - (ix_point + iy_point) / 2.0
        unit_normal_vector: Vector2 = normal_vector / normal_vector.length()
        return unit_normal_vector

The second phase checks whether the ball has entered the sides of the rectangle, registering a collision in the direction of the nearest side:

which is implemented like this:

    # Continuing from the block above: Check whether the center of the circle is in
    # one of the shaded regions above (and thus intersects a side).
    elif (circle_pos.x + circle_radius >= rectangle_pos.x and
          circle_pos.x - circle_radius <= rectangle_pos.x + rectangle_size.x and
          circle_pos.y >= rectangle_pos.y and
          circle_pos.y <= rectangle_pos.y + rectangle_size.y
    ) or (
          circle_pos.x >= rectangle_pos.x and
          circle_pos.x <= rectangle_pos.x + rectangle_size.x and
          circle_pos.y + circle_radius >= rectangle_pos.y and
          circle_pos.y - circle_radius <= rectangle_pos.y + rectangle_size.y
    ):
        # Determine which side is closest, and return a normal vector pointing out
        # from that side.
        delta = circle_pos - (rectangle_pos + rectangle_size / 2.0)
        if abs(delta.x) >= abs(delta.y):
            normal_vector = v2(-1.0 if delta.x < 0.0 else 1.0, 0.0)
        else:
            normal_vector = v2(0.0, -1.0 if delta.y < 0.0 else 1.0)
        return normal_vector

These normal vectors are used to directly reflect the ball's velocity vector; that is, we project the velocity along the normal vector and reverse only that component, preserving the perpendicular component:
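In vector terms, reflecting a velocity v about a unit normal n is the standard formula v' = v - 2 (v . n) n. The sketch below shows it with a tiny stand-in vector type; Vec2 and reflect are hypothetical names for illustration, not the project's Vector2 class or its actual reflection routine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec2:
    """Minimal stand-in for a 2D vector type (hypothetical)."""
    x: float
    y: float

    def dot(self, other: "Vec2") -> float:
        return self.x * other.x + self.y * other.y

    def __sub__(self, other: "Vec2") -> "Vec2":
        return Vec2(self.x - other.x, self.y - other.y)

    def __mul__(self, scalar: float) -> "Vec2":
        return Vec2(self.x * scalar, self.y * scalar)


def reflect(velocity: Vec2, unit_normal: Vec2) -> Vec2:
    """Reverse the component of velocity along unit_normal, keep the rest.

    unit_normal must have length 1, otherwise the result is scaled wrongly.
    """
    along_normal = velocity.dot(unit_normal)
    return velocity - unit_normal * (2.0 * along_normal)
```

For a ball moving down and to the right that hits a floor whose normal is (0, 1), only the y component flips: reflect(Vec2(3.0, -2.0), Vec2(0.0, 1.0)) gives Vec2(3.0, 2.0). A corner hit simply supplies a diagonal normal to the same function.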

Audio playback, although easier on the Pi, still took a bit of work, especially because I wanted something a bit more sophisticated than exec("aplay foo.wav"). I came up with the following design:

# This is called periodically by sounddevice, which expects output to be filled with num_frames of audio data.
def _stream_callback(self, output: 'cffi.FFI.buffer', num_frames: int, time: 'cffi.FFI.CData', status: sd.CallbackFlags):
    output_start = 0
    while output_start < len(output):
        if self._curr_sample is None:
            try:
                # Get the name/enum value from the queue (added to the queue by the play() function).
                self._curr_sample = self._samples_to_play.get_nowait()
                self._curr_sample_offset = 0
            except queue.Empty:
                # If there is none, pad the remaining output with zero bytes (silence) and return.
                output[output_start:] = b'\0' * (len(output) - output_start)
                return
        # Get the reference to the bytes for this sample (all samples are loaded at __init__).
        sample_data = self._sample_cache[self._curr_sample.value][0]
        # Write until the end of the sample or the end of the output buffer, whichever comes first.
        bytes_to_write = min(len(sample_data) - self._curr_sample_offset, len(output) - output_start)
        output[output_start:output_start + bytes_to_write] = sample_data[self._curr_sample_offset:self._curr_sample_offset + bytes_to_write]
        output_start += bytes_to_write
        # Advance within the sample by the number of bytes just copied.
        self._curr_sample_offset += bytes_to_write
        # If we've reached the end of the current sample, loop to the beginning if looping is enabled
        # or clear the reference for the next sample to be loaded.
        if self._curr_sample_offset >= len(sample_data):
            if self.loop:
                self._curr_sample_offset = 0
            else:
                self._curr_sample = None
                self._curr_sample_offset = None
    return

This is relatively basic, in that it just performs byte-level copying and not any actual sound mixing, but it supported the features we needed, including playing sounds sequentially (such as ready player one through four), looping sounds (for the idle ambient track), and interrupting sounds with others (for gameplay SFX).

The sound effects themselves, supplied by Ed, are small .wav files that are all loaded into memory at startup using Python's built-in wave module. The "ready player" sounds (triggered when each player first presses their controller button) and the "it begins" sound (triggered when all players are ready and the countdown starts) are recordings of Ed's voice, with some post-processing applied to give it more of a booming, announcer-like quality.
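Loading every .wav file up front with the wave module might look something like the sketch below. It is an illustration under assumptions: the load_samples name, the use of the file stem as the cache key, and the exact tuple layout are hypothetical (the callback above only shows that the raw bytes sit in element 0 of each cache entry).

```python
import wave
from pathlib import Path
from typing import Dict, Tuple

# (raw PCM bytes, sample rate in Hz, channel count, bytes per sample)
SampleEntry = Tuple[bytes, int, int, int]


def load_samples(directory: Path) -> Dict[str, SampleEntry]:
    """Read every .wav file in directory fully into memory.

    Hypothetical helper: keys are file names without the extension.
    """
    cache: Dict[str, SampleEntry] = {}
    for path in sorted(directory.glob("*.wav")):
        with wave.open(str(path), "rb") as wav:
            frames = wav.readframes(wav.getnframes())
            cache[path.stem] = (
                frames,
                wav.getframerate(),
                wav.getnchannels(),
                wav.getsampwidth(),
            )
    return cache
```

Keeping the sample rate, channel count, and sample width next to the bytes makes it easy to check that all files share the format the output stream was opened with, since a byte-copying callback cannot convert between formats.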

Getting to MVP

While all this software was being written, Ed wanted a fallback in case it wasn't ready in time. He used WLED for this, as it provides an ample assortment of animations out-of-the-box that quickly created an interesting display:

9/11/24: Rainbow waves generated by WLED are mesmerizing on the jug panel. Credit: Ed Morra

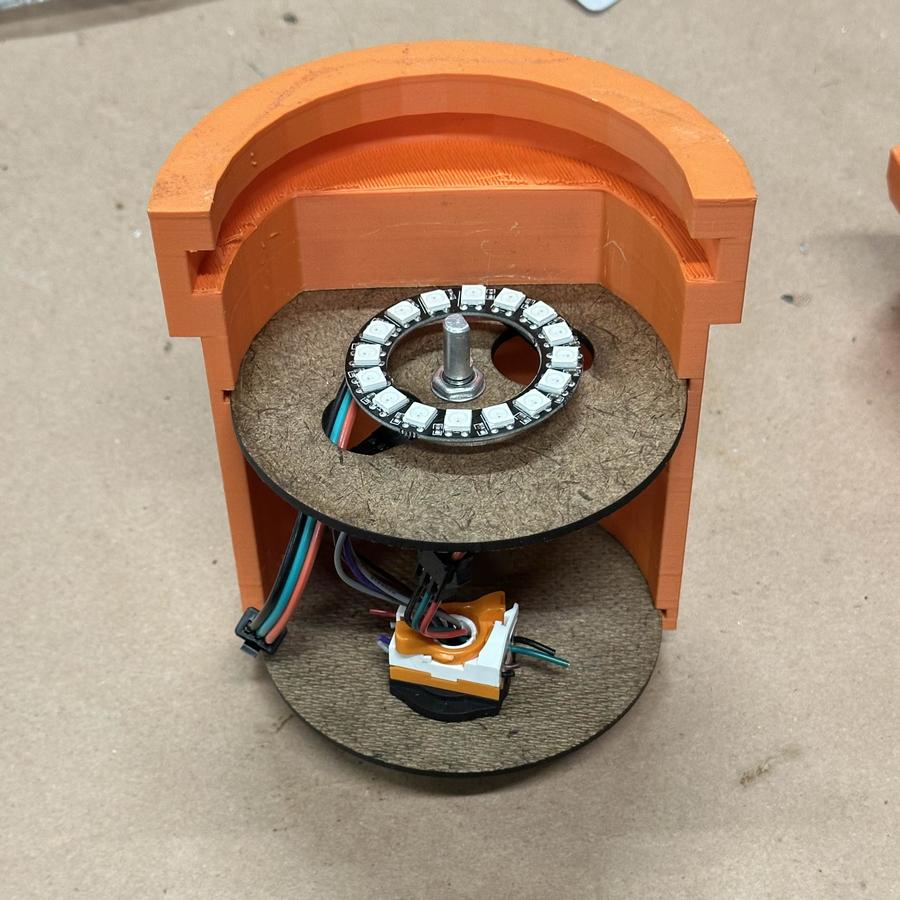

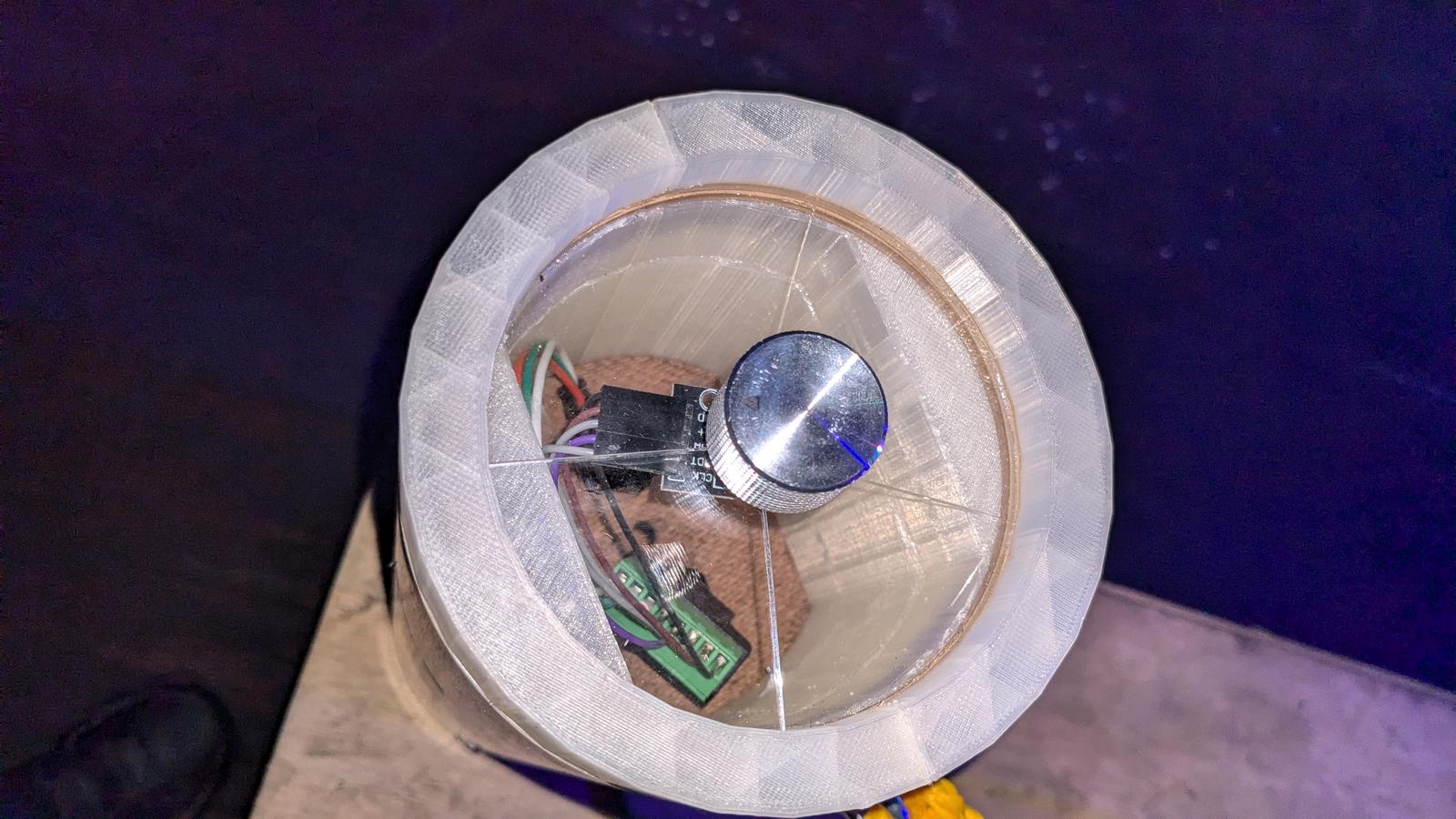

With that milestone cleared, and the display working properly, the race was on to build working controllers before IngenuityFest started on the 26th. The initial design we had in mind contained a section for an addressable LED ring light around the main controller knob, but we ran into both hardware and software limitations and had to cut it from the final design:

Software compatibility was the last hurdle. Most of the issues cleared up quickly, but there was one nagging problem that wasn't so easily solved: the encoder inputs would sporadically become inverted. The input processing code for this had been written from scratch, since adafruit-blinka didn't support it. Originally, the code looked like this:

from RPi import GPIO
import digitalio

class IncrementalEncoder:
    def _pin_a_interrupt(self, pin_id):
        self._count += 1 if self._prev_value_a == self._prev_value_b else -1
        self._prev_value_a = not self._prev_value_a

    def _pin_b_interrupt(self, pin_id):
        self._count += -1 if self._prev_value_a == self._prev_value_b else 1
        self._prev_value_b = not self._prev_value_b

    def __init__(self, pin_a: digitalio.Pin, pin_b: digitalio.Pin, divisor: int = 4):
        self.pin_a = pin_a
        self.pin_b = pin_b
        self.divisor = divisor
        self._count = 0
        GPIO.setup(pin_a.id, GPIO.IN)
        GPIO.setup(pin_b.id, GPIO.IN)
        GPIO.add_event_detect(pin_a.id, GPIO.BOTH, callback=self._pin_a_interrupt, bouncetime=20)
        GPIO.add_event_detect(pin_b.id, GPIO.BOTH, callback=self._pin_b_interrupt, bouncetime=20)
        self._prev_value_a = bool(GPIO.input(self.pin_a.id))
        self._prev_value_b = bool(GPIO.input(self.pin_b.id))

    @property
    def position(self):
        return self._count // self.divisor

The version that replaced it is built on gpiozero. It handles rising and falling edges separately and records each pin's level explicitly instead of toggling a stored value:

import digitalio
from gpiozero import Button

class IncrementalEncoder:
    def _pin_a_rising(self):
        self._count += 1 if self._prev_value_b else -1
        self._prev_value_a = True

    def _pin_a_falling(self):
        self._count += -1 if self._prev_value_b else 1
        self._prev_value_a = False

    def _pin_b_rising(self):
        self._count += -1 if self._prev_value_a else 1
        self._prev_value_b = True

    def _pin_b_falling(self):
        self._count += 1 if self._prev_value_a else -1
        self._prev_value_b = False

    def __init__(self, pin_a: digitalio.Pin, pin_b: digitalio.Pin, divisor: int = 4):
        self.divisor = divisor
        self._count = 0
        self.pin_a = Button(pin_a.id, pull_up=None, active_state=True)
        self.pin_b = Button(pin_b.id, pull_up=None, active_state=True)
        self.pin_a.when_pressed = self._pin_a_rising
        self.pin_a.when_released = self._pin_a_falling
        self.pin_b.when_pressed = self._pin_b_rising
        self.pin_b.when_released = self._pin_b_falling
        self._prev_value_a = bool(self.pin_a.value)
        self._prev_value_b = bool(self.pin_b.value)

    @property
    def position(self):
        return self._count // self.divisor
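To see how the two counting schemes differ when an edge goes missing, here is a hardware-free sketch. It is an illustration, not project code: ToggleDecoder and LevelDecoder are hypothetical stand-ins that copy only the counting logic of the two listings, and the dropped edge is an assumed failure mode (for example, an edge swallowed by debouncing), not a diagnosis taken from the project.

```python
from typing import List, Optional, Tuple

Edge = Tuple[str, bool]  # (pin name, new level)

# One full quadrature cycle while turning in a single direction.
CYCLE: List[Edge] = [("a", True), ("b", True), ("a", False), ("b", False)]


class ToggleDecoder:
    """Counting logic of the first listing: flip the stored state on each edge."""

    def __init__(self) -> None:
        self.a = self.b = False
        self.count = 0

    def edge(self, pin: str, level: bool) -> None:
        if pin == "a":
            self.count += 1 if self.a == self.b else -1
            self.a = not self.a
        else:
            self.count += -1 if self.a == self.b else 1
            self.b = not self.b


class LevelDecoder:
    """Counting logic of the second listing: store the level each edge reports."""

    def __init__(self) -> None:
        self.a = self.b = False
        self.count = 0

    def edge(self, pin: str, level: bool) -> None:
        if pin == "a":
            # Rising: +1 if b is high; falling: +1 if b is low.
            self.count += 1 if self.b == level else -1
            self.a = level
        else:
            # Rising: -1 if a is high; falling: -1 if a is low.
            self.count += -1 if self.a == level else 1
            self.b = level


def run(decoder, edges: List[Edge], drop_index: Optional[int] = None) -> List[int]:
    """Feed edges to decoder, skipping one if requested; return per-edge steps."""
    steps = []
    for i, (pin, level) in enumerate(edges):
        if i == drop_index:
            continue  # this edge never reaches the decoder
        before = decoder.count
        decoder.edge(pin, level)
        steps.append(decoder.count - before)
    return steps
```

In this model, a clean run of ToggleDecoder steps +1 on every edge, but after one dropped edge every later step is -1, so the direction stays inverted. LevelDecoder takes a single wrong step after the dropped edge and then returns to its normal direction, because each handler overwrites the stored level with the real one.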

First Contact with the Real World

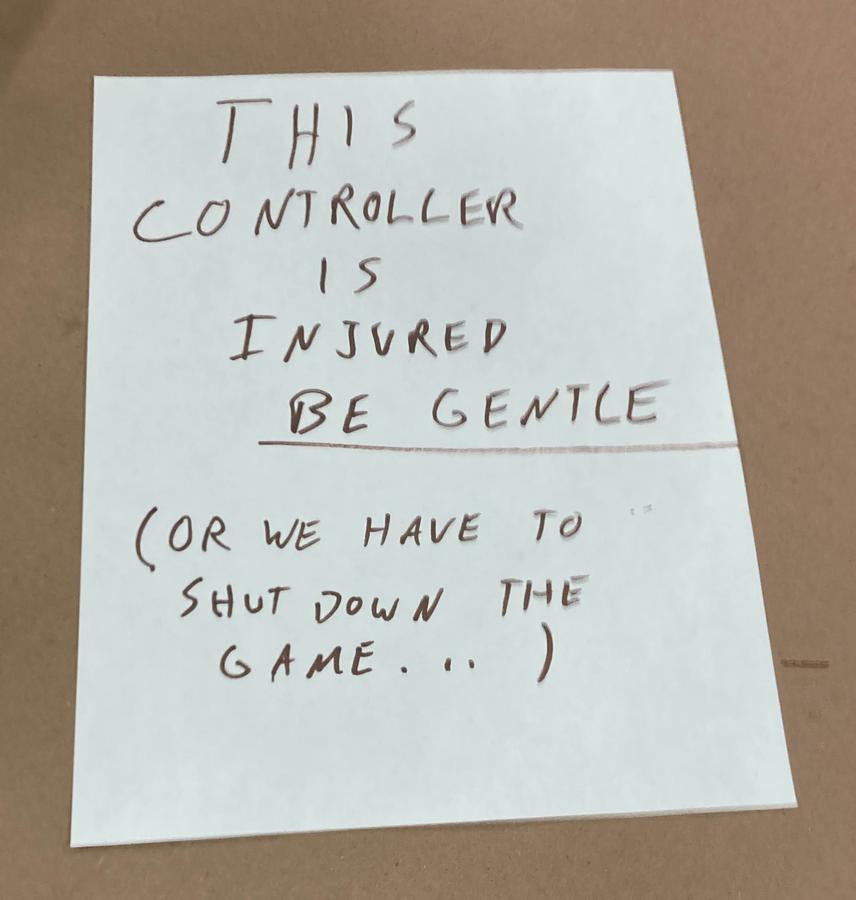

The first day of the festival was off to a pretty smooth start – both StarLords and the giant birthday cake were working correctly, with Ed explaining the game in his best "circus impresario" voice to throngs [2] of gathered attendees. I was mostly enjoying the show, when I happened back upstairs to find the area quiet and the game turned off. One of the controllers' clear acrylic plates had cracked, making the button no longer pressable and preventing the game from starting (since all four players had to click their buttons to start the game). Sam ginned up a quick fix with duct tape and added a sign to discourage further abuse.

Nevertheless, by the end of Thursday night, at least two towers had succumbed to the onslaught:

The cause of these failures was fairly clear: excited children and adults alike tended to slam the buttons much harder than we had anticipated in testing. The buttons' vertical placement also made it easy for adults to lean on them with most of their body weight. Replacement face-plates were duly created: three from 3D-printed plastic and one from a cut sheet of aluminum. Both materials seemed to survive better than the acrylic and got us through the rest of the three-day event.

A software failure also presented itself: Every once in a while, the ball would get stuck bouncing between opposite walls out of the players' reach, bringing play to a halt. The only immediate way to resolve this was for one of us to intervene and power-cycle the entire control unit, which introduced an additional 30-second delay as the Pi rebooted. There seemed to be a few different scenarios present:

- The randomly-chosen initial ball velocity being too close to one of the four cardinal directions at the beginning of the game.

- Reflection at a 90-degree corner formed by a paddle against a wall.

- Bouncing freely in the space left once two players were eliminated on the same side of the board.

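The first scenario was eliminated entirely by excluding initial ball angles that were too close to a multiple of pi / 4 (45 degrees), which rules out near-diagonal launches as well as the four cardinal directions. Any angle within 5% of that step (2.25 degrees) of such a multiple is rejected and re-rolled: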

# --------------- Before:
ball_angle = random.random() * 2.0 * math.pi
# --------------- After:
while True:
    ball_angle = random.random() * 2.0 * math.pi
    multiples_of_pi_4 = ball_angle / (math.pi / 4.0)
    if abs(round(multiples_of_pi_4) - multiples_of_pi_4) >= 0.05:
        break
# ---------------
self.ball_velocity = Vector2(initial_ball_speed * math.cos(ball_angle), initial_ball_speed * math.sin(ball_angle))

A second modification was made to solve this problem in the general case: The normal vector used to reflect the ball from the walls was given a random chance of being slightly adjusted by a small angle, as though the walls weren't perfectly smooth:

def _wall_normal_vector(self, base_vector: Vector2):  # where base_vector is <1, 0>, <0, 1>, etc. depending on the wall
    if random.random() < self.WALL_BOUNCE_DEFLECT_PROB:
        theta = random.random() * (2 * self.WALL_BOUNCE_DEFLECT_MAX_ANGLE) - self.WALL_BOUNCE_DEFLECT_MAX_ANGLE
        # Rotate base_vector by theta using the rotation matrix formula
        sin_t, cos_t = math.sin(theta), math.cos(theta)
        return v2(base_vector.x * cos_t - base_vector.y * sin_t, base_vector.x * sin_t + base_vector.y * cos_t)
    else:
        return base_vector

Overall, the Ingeneers and I were very pleased with the results of this first iteration, and we've continued to improve on it since. This article is long enough already, though, so I'll save those details for a separate one and end with a project recap video that Ed made:

Project Resources

Source code is freely available on my GitHub repository. More information about this and other projects by Ed Morra can be found on his website, big-fun-creative.com, as well as his Vimeo channel.

[1] This was an interesting story in and of itself: apparently the executive was substituting for a deliveryman at Ed's apartment building and happened to get into the elevator with Ed, at which point Ed introduced himself and gave a literal elevator speech about the project.

[2] OK, maybe not throng throngs, but a decent number of people.